Pipeliners Mac OS

- Pipeliners of America, Batesville, Mississippi. #POA has set out on a mission to show the world how the Pipeliners Of America build our economy.

- The last classic version of the game (2.2.7) was released in early 1999. The current version of MacPipes requires a PowerPC processor and runs on Mac OS 8.6/9.x with CarbonLib and natively on Mac OS X.

Reallusion CrazyTalk Animator 3.2.2029.1 Pipeline (Mac OS X), 533 MB. CrazyTalk Animator (CTA) is the world's easiest 2D animation software, enabling users of all levels to create professional animations with minimal effort. With CTA3, anyone can instantly bring an image, logo, or prop to life by applying bouncy Elastic Motion.

Apr 14, 2021: Two major factors motivate the introduction of a new Metal-based rendering pipeline on macOS. First, Apple deprecated the OpenGL rendering library in macOS 10.14 (September 2018), and Java 2D on macOS relies entirely on OpenGL for its internal rendering pipeline, so a new pipeline implementation is needed. Second, Apple claims that the Metal framework, its replacement for OpenGL, offers superior performance.

Authoring YAML pipelines on Azure DevOps is often repetitive and cumbersome. The repetition can happen at the tasks level, the jobs level or the stages level. When we write code, we refactor repetitive lines. Can we refactor pipelines the same way? Of course we can. Throughout this post, I'm going to discuss where those refactoring points are.

The YAML pipeline used for this post can be found at this repository.

Build Pipeline without Refactoring

First of all, let's build a typical pipeline without any refactoring. It has a single build stage, which contains a single job with one task.
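As a rough illustration, such an unrefactored pipeline might look like the sketch below. It is not the pipeline from the author's repository: the stage and job names, the `echo` task and the `ubuntu-latest` hosted image are assumptions chosen for the example.

```yaml
# azure-pipelines.yaml (sketch): one stage, one job, one task, no templates
trigger:
- master

stages:
- stage: Build
  jobs:
  - job: Build
    displayName: Build on a hosted agent
    pool:
      vmImage: ubuntu-latest      # hosted agent image; any valid label works
    steps:
    - script: echo "Hello world"  # the single task in this job
      displayName: Say hello
```

Everything is inline here, which is exactly what the following sections pull out into templates.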


Here's the result after running this pipeline. There's nothing special here.

Let's refactor this pipeline. We use templates for refactoring. According to the Azure Pipelines template documentation, templates can be applied in at least three places: Steps, Jobs and Stages.

Refactoring Build Pipeline at the Steps Level

Let's say that we're building a node.js based application. A typical build order can be:

- Install Node.js and npm

- Restore npm packages

- Build application

- Test application

- Generate artifact

Step 5 is optional in many cases, but steps 1-4 are almost always identical and repetitive. So why not group them into one template? That is refactoring at the Steps level. If we need step 5, we can add it after the template.
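Before templating, steps 1-4 might sit inline in every job as in the sketch below. The Node.js version and the `build`/`test` script names in `package.json` are assumptions; `NodeTool@0` is the Node.js tool installer task, and npm ships with Node.js.

```yaml
# Steps 1-4 inline in a job (sketch; versions and npm script names are assumptions)
steps:
- task: NodeTool@0          # 1. install Node.js (npm comes with it)
  inputs:
    versionSpec: '12.x'
- script: npm ci            # 2. restore npm packages from package-lock.json
  displayName: Restore npm packages
- script: npm run build     # 3. build, assuming a "build" script in package.json
  displayName: Build application
- script: npm test          # 4. test, assuming a "test" script in package.json
  displayName: Test application
```

These four entries are the block that gets copied from pipeline to pipeline, which makes them a natural candidate for a Steps level template.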

Now, let's extract the steps from the above pipeline. The original pipeline now has a template field under the steps field, plus a parameters field that passes values from the parent pipeline to the refactored template.
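A minimal sketch of what the parent side could look like after extraction. The template file name `template-steps-build.yaml` and the `message` parameter are hypothetical; the author's actual names are not given in the text.

```yaml
# Parent pipeline (sketch): the job's steps now come from a template
stages:
- stage: Build
  jobs:
  - job: Build
    pool:
      vmImage: ubuntu-latest
    steps:
    - template: template-steps-build.yaml   # hypothetical Steps level template
      parameters:
        message: Hello from the parent pipeline   # value handed to the template
```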

The refactored template declares both parameters and steps. As mentioned above, the parameters attribute gets values passed from the parent pipeline.
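A matching Steps level template could look like this sketch, again assuming a hypothetical file named `template-steps-build.yaml` with a single `message` parameter. `${{ parameters.message }}` is a template expression, so it is expanded when the pipeline is compiled, before any agent runs.

```yaml
# template-steps-build.yaml (hypothetical name): declares parameters, then steps
parameters:
- name: message
  type: string
  default: Hello from the template   # used when the parent passes nothing

steps:
- script: echo "${{ parameters.message }}"
  displayName: Print the value passed from the parent
```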

After refactoring the original pipeline, let's run it. Can you see the value passed from the parent pipeline to the steps template?

Now we're done with refactoring at the Steps level.

Refactoring Build Pipeline at the Jobs Level

This time, let's do the same at the Jobs level. Refactoring at the Steps level lets us group common tasks, while refactoring at the Jobs level deals with a bigger chunk. At the Jobs level, we can also control the build agent. However, all tasks under the steps are fixed when we call a Jobs level template.

Of course, with more advanced template expressions, we can still control which tasks run.
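One such expression is conditional insertion. In the sketch below, a hypothetical `runTests` parameter decides at compile time whether the test step is included in the job at all; the job name and npm commands are assumptions.

```yaml
# Jobs level template (sketch): a step inserted only when a parameter asks for it
parameters:
- name: runTests
  type: boolean
  default: true

jobs:
- job: Build
  pool:
    vmImage: ubuntu-latest
  steps:
  - script: npm run build
    displayName: Build application
  - ${{ if eq(parameters.runTests, true) }}:   # evaluated when the template expands
    - script: npm test
      displayName: Test application
```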

Let's update the original pipeline at the Jobs level.
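A sketch of the parent pipeline at this level, where the whole job comes from `template-jobs-build.yaml`. The `vmImage` parameter name is an assumption. `vs2017-win2016` was the hosted label for Windows Server 2016 at the time; Microsoft has since retired that image.

```yaml
# Parent pipeline (sketch): the job is now supplied by a Jobs level template
stages:
- stage: Build
  jobs:
  - template: template-jobs-build.yaml
    parameters:
      vmImage: vs2017-win2016   # Windows Server 2016 hosted agent (since retired)
```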

Then create the template-jobs-build.yaml file that declares the Jobs level template.
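A minimal version of `template-jobs-build.yaml` might look like the sketch below. Only the build agent is parameterised; the steps inside are fixed, as described above. The parameter name, default image and `echo` step are assumptions.

```yaml
# template-jobs-build.yaml (sketch): agent is a parameter, steps are fixed
parameters:
- name: vmImage
  type: string
  default: ubuntu-latest

jobs:
- job: Build
  pool:
    vmImage: ${{ parameters.vmImage }}   # chosen by the parent pipeline
  steps:
  - script: echo "Running on ${{ parameters.vmImage }}"
    displayName: Show the build agent image
```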

Once we run the pipeline, we can see which values are parameterised. Because we set the build agent OS to Windows Server 2016, the pipeline log shows:

Refactoring Build Pipeline at the Stages Level

This time, let's refactor the pipeline at the Stages level. A stage can contain multiple jobs that run in parallel or one after another. If there are common tasks, we can refactor them at the Jobs level, but if there are common jobs, then the stage itself can be refactored. The following parent pipeline calls the stage template with parameters.
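A sketch of such a parent pipeline, assuming a hypothetical `template-stages-build.yaml` with `stageName` and `vmImage` parameters. `ubuntu-16.04` matches the agent mentioned in this post, but Microsoft has since retired that hosted image.

```yaml
# Parent pipeline (sketch): the stage itself comes from a template
stages:
- template: template-stages-build.yaml   # hypothetical Stages level template
  parameters:
    stageName: Build
    vmImage: ubuntu-16.04                # hosted Ubuntu 16.04 agent (since retired)
```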

The stage template might look like the code below. Can you see the build agent OS and other values passed through parameters?
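One possible shape for that stage template, under the same assumptions: the file name and both parameter names are hypothetical, and the single `echo` step stands in for the real build jobs.

```yaml
# template-stages-build.yaml (hypothetical name)
parameters:
- name: stageName
  type: string
  default: Build
- name: vmImage
  type: string
  default: ubuntu-latest

stages:
- stage: ${{ parameters.stageName }}
  jobs:
  - job: Build
    pool:
      vmImage: ${{ parameters.vmImage }}   # build agent OS from the parent
    steps:
    - script: echo "Stage ${{ parameters.stageName }} on ${{ parameters.vmImage }}"
```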

Let's run the refactored pipeline. Based on the parameter, the build agent has been set to Ubuntu 16.04.

Refactoring Build Pipeline with Nested Templates

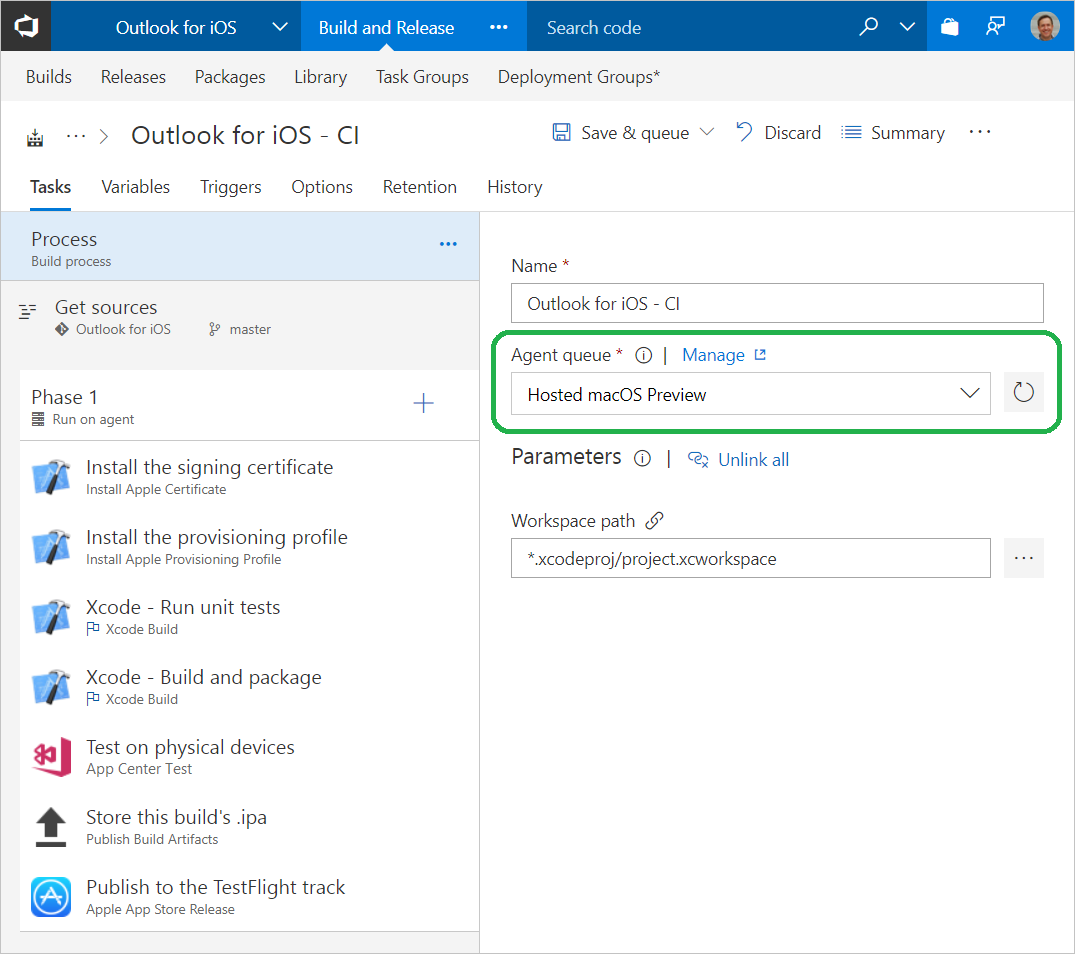

We've refactored at three different levels. It seems we might be able to put them all together, so let's try. The following pipeline passes macOS as the build agent.
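A sketch of that parent pipeline, assuming a hypothetical nested stage template named `template-stages-nested.yaml` and using the `macOS-latest` hosted image label for the build agent.

```yaml
# Parent pipeline (sketch): passes macOS down through the nested templates
stages:
- template: template-stages-nested.yaml   # hypothetical nested Stages level template
  parameters:
    vmImage: macOS-latest                 # hosted macOS build agent
```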

The parent pipeline calls the nested template at the Stages level. Inside that nested template, another template is called at the Jobs level.
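A nested Stages level template could simply forward its parameter to a Jobs level template, as in this sketch. Both file names are hypothetical; template paths here are resolved relative to the file that contains the `template` reference.

```yaml
# template-stages-nested.yaml (hypothetical name): a stage that delegates its jobs
parameters:
- name: vmImage
  type: string
  default: ubuntu-latest

stages:
- stage: Build
  jobs:
  - template: template-jobs-nested.yaml   # hypothetical Jobs level template
    parameters:
      vmImage: ${{ parameters.vmImage }}  # forward the value unchanged
```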


Here's the nested template at the Jobs level. It calls the existing template at the Steps level.
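The nested Jobs level template in turn hands its steps to the Steps level template. In this sketch, `template-jobs-nested.yaml`, `template-steps-build.yaml` and the `message` parameter are assumed names, not the author's.

```yaml
# template-jobs-nested.yaml (hypothetical name): a job that delegates its steps
parameters:
- name: vmImage
  type: string
  default: ubuntu-latest

jobs:
- job: Build
  pool:
    vmImage: ${{ parameters.vmImage }}
  steps:
  - template: template-steps-build.yaml   # hypothetical Steps level template
    parameters:
      message: Built on ${{ parameters.vmImage }}
```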

This nested pipeline works perfectly.

The build pipeline has been refactored at different levels. Let's move on to the release pipeline.

Release Pipeline without Refactoring

It's not that different from the build pipeline, except that it uses a deployment job instead of a regular job. A typical release pipeline without a template might look like:
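As a sketch of that structure (not the author's pipeline), a deployment job declares an `environment` and a `strategy`; here the `runOnce` strategy wraps the steps. The environment name `production` and the `echo` step are assumptions.

```yaml
# Release pipeline without templates (sketch)
stages:
- stage: Release
  jobs:
  - deployment: Deploy            # a deployment job instead of a regular job
    pool:
      vmImage: ubuntu-latest
    environment: production       # hypothetical environment name
    strategy:
      runOnce:
        deploy:
          steps:
          - script: echo "Deploying the artifact"
            displayName: Deploy application
```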

Can you spot that the Jobs level uses a deployment job? Here's the pipeline run result.

Like the build pipeline, the release pipeline can also be refactored at three levels: Steps, Jobs and Stages. As there's no difference between build and release here, I'm just going to show the refactored templates.

Refactoring Release Pipeline at the Steps Level

The easiest and simplest refactoring happens at the Steps level. Here's the parent pipeline.
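In a release pipeline, the Steps level template is referenced inside the deployment strategy rather than directly under the job. A sketch, assuming a hypothetical `template-steps-release.yaml` that takes a `message` parameter:

```yaml
# Parent release pipeline (sketch): steps template inside the runOnce strategy
stages:
- stage: Release
  jobs:
  - deployment: Deploy
    pool:
      vmImage: ubuntu-latest
    environment: production                     # hypothetical environment name
    strategy:
      runOnce:
        deploy:
          steps:
          - template: template-steps-release.yaml   # hypothetical Steps template
            parameters:
              message: Deploying from the parent pipeline
```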

And this is the Steps template. Its structure is no different from the one in the build pipeline.

This is the pipeline run result.

Refactoring Release Pipeline at the Jobs Level

This is the release pipeline refactoring at the Jobs level.

The refactored template looks like the one below. Each deployment job contains the environment field, which can also be parameterised.
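A sketch of such a Jobs level template with the environment parameterised. The file name, parameter names and defaults are assumptions for illustration.

```yaml
# template-jobs-release.yaml (hypothetical name): deployment job with a parameterised environment
parameters:
- name: vmImage
  type: string
  default: ubuntu-latest
- name: environment
  type: string
  default: dev

jobs:
- deployment: Deploy
  pool:
    vmImage: ${{ parameters.vmImage }}
  environment: ${{ parameters.environment }}   # target environment from the parent
  strategy:
    runOnce:
      deploy:
        steps:
        - script: echo "Deploying to ${{ parameters.environment }}"
```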

Refactoring Release Pipeline at the Stages Level

As the refactoring process is the same, I'm just showing the result here:

Refactoring Release Pipeline with Nested Templates

Of course, we can compose the release pipeline with nested templates.

So far, we've completed refactoring at the Stages, Jobs and Steps levels by using templates. Because pipelines are repetitive by nature, there will be situations that call for refactoring, so this template approach is worth considering. Which level a template belongs at, however, depends on the circumstances, because every situation is different.

However, there's one thing to keep in mind: try to keep templates as simple as possible, whatever their depth or level. Template expressions are rich enough to support advanced techniques like conditions and iterations, but that doesn't mean we should use them everywhere. When we start using templates, the first ones should be small and simple; we can then improve them and make them more complex. The multi-stage pipeline feature is outstanding, although it's still in public preview, and it becomes even better with these refactoring techniques.
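For reference, an iteration looks like the sketch below: `${{ each }}` repeats a job for every item in an object parameter. The `platforms` parameter and its items are hypothetical. It also shows why restraint helps: even this small loop is harder to read than two plain jobs.

```yaml
# Jobs level template (sketch): one job per platform via an each-loop
parameters:
- name: platforms
  type: object
  default:
  - name: linux
    vmImage: ubuntu-latest
  - name: windows
    vmImage: windows-latest

jobs:
- ${{ each platform in parameters.platforms }}:
  - job: build_${{ platform.name }}          # job names must be unique
    pool:
      vmImage: ${{ platform.vmImage }}
    steps:
    - script: echo "Building on ${{ platform.vmImage }}"
```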

Software companies grow up fast, and that growth fuels the need for infrastructure that can support a product at scale. As a result, enterprise level CI generally comes on the heels of other milestones. This is natural, particularly in the notoriously tricky world of Mac-based CI. Add to that the fact that local Mac mini 'farms,' which seem to be the default starting point for macOS and iOS CI, can grow quite large and still be very functional.

But at some point, most companies grow beyond their rudimentary build setup. They suffer major headaches and productivity loss. They make adjustments, but hit an internal, operational ceiling. Then, probably a single engineer is tasked with fixing it. At that point, said engineer pokes around online for a viable solution.

As a case in point, MacStadium hosted a panel discussion at AltConf 2019 (during Apple's annual WWDC) that focused on CI best practices. Top DevOps engineers from the likes of Pandora, Aspyr Media, and PSPDFKit were kind enough to share their insights on the matter.

Over the course of the discussion, one thing became abundantly clear: developing a viable CI system is nothing short of an organic process – often an afterthought upon which an entire company’s profitability can hinge. That is, at some point, CI emerges as being massively important to a company’s bottom line, but by the time that happens, a wide variety of factors will likely already be in play.

If that 'coming of age' story sounds familiar, you're in the right place, and MacStadium is here to help.

In the time that we've spent working with all manner of software companies, we've found that a variety of factors combine to shape the best path forward for a given company in this position. Understanding what those factors are, and where your company falls on a continuum made up of these factors, will ease the pain of making this transition.

Project Scale:

The scale of the project itself will almost certainly influence your CI system selections. In the most direct sense, the size of your codebase and the frequency with which you kick off builds will determine your needs. But the beauty of moving up to an enterprise level system is that you have the freedom to make selections that do more than simply cover the bases. This is an opportunity to improve the work lives of colleagues. To do so, it helps to think about team fit as much as about how the various components of your new CI pipeline integrate technically on paper.

Real Cost of CI Delays:

Ultimately, you've been tasked with finding the greatest value that you can within the bounds of your current situation. It’s an all too common theme with young, fast-moving tech companies -- “We didn’t realize how much it was costing us, because we just didn’t have the resources to track it.” – Confucius

While a given company's internal variables are far too great for us to pin this down exactly, the following nearly always apply to the total cost of CI delays:

- Idle developers

- A break in focus for a developer who is mid-problem

- Delay to delivery of the product

- Average build time

- Number of builds in a given time frame

Whatever figure you come to after considering the above, less the cost of the new CI system and the team that is required to manage it, represents your total potential savings.
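As a back-of-the-envelope sketch, the estimate above can be written as a formula. The symbols are our own labels, not a MacStadium model: $N_{\text{builds}}$ is builds per month, $\bar{t}_{\text{wait}}$ the average time a developer sits idle per build, $r_{\text{dev}}$ a loaded hourly developer cost, and $C_{\text{focus}}$, $C_{\text{delivery}}$ the harder-to-measure costs of broken focus and delayed delivery.

```latex
\begin{aligned}
C_{\text{delay}} &\approx N_{\text{builds}}\,\bar{t}_{\text{wait}}\,r_{\text{dev}} + C_{\text{focus}} + C_{\text{delivery}} \\
S &= C_{\text{delay}} - \left(C_{\text{CI}} + C_{\text{team}}\right)
\end{aligned}
```

With purely hypothetical numbers, 1,000 builds a month, 15 minutes of idle waiting per build and \$80 per hour give $1000 \times 0.25\,\text{h} \times \$80 = \$20{,}000$ a month from idle time alone. If the new CI system and its team cost \$12,000 a month, the potential savings are at least \$8,000 a month before the focus and delivery terms are counted.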

Existing Infrastructure:

To retool or not to retool? That is the question. The answer may lie in your existing infrastructure. For example, Chef and Puppet are both Ruby-based tools, but with a different focus. Chef is designed with VMs as a primary use case, while Puppet deploys better to legacy infrastructure. Both cover the same core functionality, a central server ensuring that subordinate machines stay up to date with the latest patches without overwhelming the network, so the choice between the two will probably be driven by the existing hardware.

Existing Codebase:

Although CI is theoretically language agnostic, there are certainly patterns linking CI tool selection to a project's existing codebase. We see these first-hand as we help engineers settle on the best fit for their organization. To continue the example above, Ansible has seen a massive adoption spike over Chef and Puppet in recent years among teams without Ruby experience. Its YAML approach makes it easy to learn and adopt, and even though many consider it less capable than Chef or Puppet, the sacrifice in features is worth the reduced technical debt.
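To illustrate that YAML approach, here is a minimal Ansible playbook sketch for patching Mac build machines. It assumes a hypothetical `mac_build_agents` inventory group and that the `community.general` collection is installed. Treat it as an illustration, not a recommended production setup.

```yaml
# Minimal Ansible playbook sketch (hypothetical inventory group: mac_build_agents)
- name: Keep macOS build agents patched
  hosts: mac_build_agents
  tasks:
    - name: Install all available macOS software updates
      ansible.builtin.command: softwareupdate --install --all
      become: true              # softwareupdate needs root to install updates

    - name: Keep git current via Homebrew (runs as the login user, not root)
      community.general.homebrew:
        name: git
        state: latest
        update_homebrew: true
```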


Team Culture:

Smaller teams may favor self-managed, open-source solutions to a point, and then need to retool when personnel limitations are reached. For example, over the course of our panel discussion with proven DevOps superstars, Jenkins was rightly described as being both 'not scalable past a certain point' and 'scalable to a massive size.' These ostensibly conflicting perceptions of the same tool are really the product of differing team cultures, talent bases, use cases, and budgets. CI is meant to be a liberating force for development teams, so a good fit in terms of team culture both in the current moment, and hopefully well into the future, is essential.

Further, some teams value a pure open-source product and won't consider solutions such as Buildkite or Bamboo, fearing vendor lock-in. For these teams, the investment in making a Jenkins-type solution scale for their use case is time well spent. Others consider a paid service that scales out of the box to be well worth the up-front cost, given the net gain in time-to-market.

That said, for those teams that opt for an open-source solution, you certainly won't be in uncharted waters. Prime examples of this:

- Microsoft maintains an extensive library of Chef scripts for updating macOS images

- Codebase is a driver of automation tool selection

- Dropbox can be used for hosting compiled builds

- Automating builds is possible with a Slack bot

- Community guidance on built-in tools (such as Spotlight, which ought to be fully or partially disabled)
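As one example of acting on the Spotlight advice, the sketch below turns indexing off with `mdutil -a -i off` (all volumes, indexing off), wrapped in an Ansible task. The `mac_build_agents` inventory group is hypothetical, and whether to disable Spotlight fully or only partially is a per-team call.

```yaml
# Ansible sketch: disable Spotlight indexing on Mac build agents
- name: Tune macOS build agents
  hosts: mac_build_agents        # hypothetical inventory group
  tasks:
    - name: Turn off Spotlight indexing on all volumes
      ansible.builtin.command: mdutil -a -i off
      become: true               # changing indexing settings requires root
```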

TLDR Summary:

Team culture is a major piece of the puzzle. So is pinning down the real cost of CI delays and malfunctions. Still, there is no single 'right' answer. And ultimately, MacStadium is ready to help with the journey, as teaming up with a datacenter with scalable infrastructure is the first logical step to a mature, enterprise-grade Apple CI system.